Next-Gen Data Centers Bring the Digital Edge to the Data

by Noah Woodburn, Chief Cloud Correspondent

Data, workloads, storage: none of them are created equal. To address the evolving trends in cloud computing and to break down the different business drivers for each, we interviewed IT managers and drew on recent industry studies to map out each scenario. Figuring out where to put data and how best to access it is at the heart of what matters most to IT professionals and CIOs in every industry. The need to undergo a "digital transformation" is causing a massive shift, one that’s forcing companies to rethink their cloud and colo strategies. Tony Bishop of Digital Realty estimates that 70% of enterprises are going to entertain digital transformation initiatives this coming year, while only 21% feel that they have completely finished their business's digital transformation. If you're part of the 70% entertaining a digital transformation initiative this year, know that 1) you're not alone and 2) we're here to help.

Digital transformation is creating both opportunities and challenges for IT teams all over the world. Done right, DX (digital transformation) can turn a company's digital infrastructure into a competitive weapon by moving past the weaknesses of old-school data center infrastructure. But modifying IT environments incurs cost, takes time, and demands new skills, and power and cooling become an issue at any sizable scale. Colocation offers a powerful opportunity to re-architect towards a decentralized infrastructure, giving critical access to carriers, providers, and networks with turnkey solutions like SD-WAN that can be geared for future scaling, and putting IT at the helm to lead the charge towards digital transformation.

The challenges of traditional on-premises data centers

On-premises data centers, also referred to as private data infrastructure, are servers that companies keep in-house. These tend to be expensive to design and manage, in terms of both operational expenditures (OpEx) and capital expenditures (CapEx). However, that doesn’t mean companies are ditching their on-prem data center environments. In fact, most companies Digital Realty studied are choosing a robust mix of colo, cloud, and on-premises infrastructure, understanding that not all workloads belong in the public cloud. A report recently released by Data Center Knowledge indicates rapidly growing demand for data centers, projecting that the average number of data centers per company will grow from 12 to 17 over the next three years. You read that right: 17 per company. For IT leaders, one key challenge will be to seek out Trusted Advisors who can truly guide them through the complexities of digital transformation and the often hard-to-find IT skill sets it requires.

As a result of this growth in hybrid hosting (a cost center already under financial and operational pressures), data center capital and operational costs are expected to keep climbing. As costs rise and demands increase, so does the need to train and develop IT team skill sets. And while demand for a more technical workforce continues to increase, supply appears to remain stagnant. In fact, according to a recent AFCOM survey entitled "The State of the Data Center", four in five companies stated that they are struggling to fill roles across IT security, DevOps, IT systems, cloud architecture, and data center facility technicians, operators, or engineers.

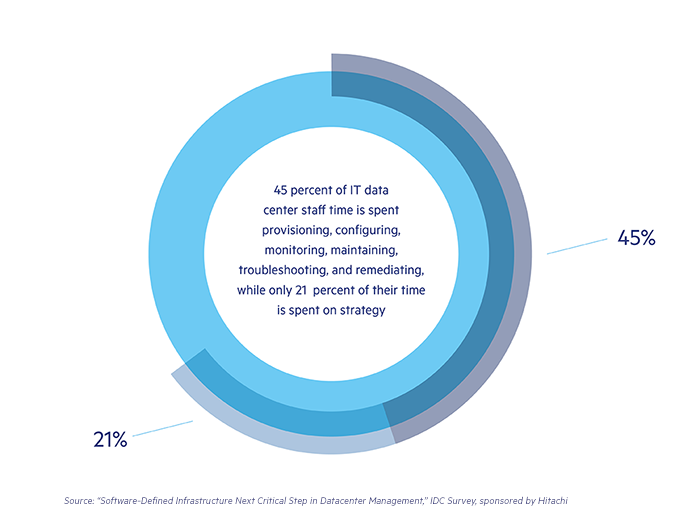

IT teams that direct most workloads to on-premises data centers often find themselves spending substantial cycles on day-to-day data center management activities. An IDC study (see graphic) finds that upwards of 45 percent of IT data center staff time is spent on what can only be classified as functional overhead: provisioning, configuring, monitoring, maintaining, troubleshooting, and remediation. Only 21 percent of their time is spent on strategy.

IT’s seemingly endless struggle to end the logjam

IT teams often find themselves in a logjam, unable to focus on business-enabling initiatives. Business leaders want their IT teams spearheading initiatives such as mobility, cloud adoption, IoT (Internet of Things), and other efforts that transform customer experiences (CX) and drive revenue. But lacking time and resources, IT managers struggle to free their teams to act on these strategic directives. And when they do find time to work on new business initiatives, they often add more costs and constraints to the data center.

Historically, adding more floor space and server and storage racks is what IT teams did to meet new business demands. But the exponential demand for more data center resources and power makes it difficult to do so. Our definition of a data center ecosystem has also evolved to go well beyond buildings that offer space and power. Data centers must be seen as innovation and technology partners.

Emergence of third-party colocation data centers

Instead of expanding existing data centers or constructing new ones, many IT teams have made third-party colocation a critical part of their strategy. These outsourced infrastructure extensions have been around for 20 years. Gartner predicts that 80 percent of enterprises will have shut down their traditional data centers by 2025 (compared to 10 percent today), migrating workloads either to colocation data centers or to the public cloud.

But the dynamics of colocation data centers are much different today than a few years ago. First-generation colocation data centers simply provided businesses with racks and space for servers and storage systems and were largely used by small to midsize businesses.

The second generation introduced fit-for-purpose solutions, conceptually borrowed from wholesale data centers, enabling businesses of all sizes to defray the cost of building and maintaining a data center with state-of-the-art technologies.

Third-generation colocation addresses the shift to data-centric architectures that require distributed data hosting, connectivity, and large-scale computing to be accommodated in a single data center campus. It also enables businesses to embrace cloud capabilities, both private and public.

Colocation providers supply the building, cooling, power, bandwidth, and physical security. They typically lease space by the rack, cabinet, cage, or room, while customers maintain ownership of their hardware and software settings. By coordinating with the colocation provider, end customers can quickly and easily scale space requirements and network bandwidth up or down. The result is that customers do not pay for space and network bandwidth they do not need, which is often the case with on-premises data centers. More advanced colocation providers even include on-demand and scheduled services, which end customers can use for deployment, patching, incident response and event management support, and more.

In Digital Transformation, Cloud Isn’t Always the Answer

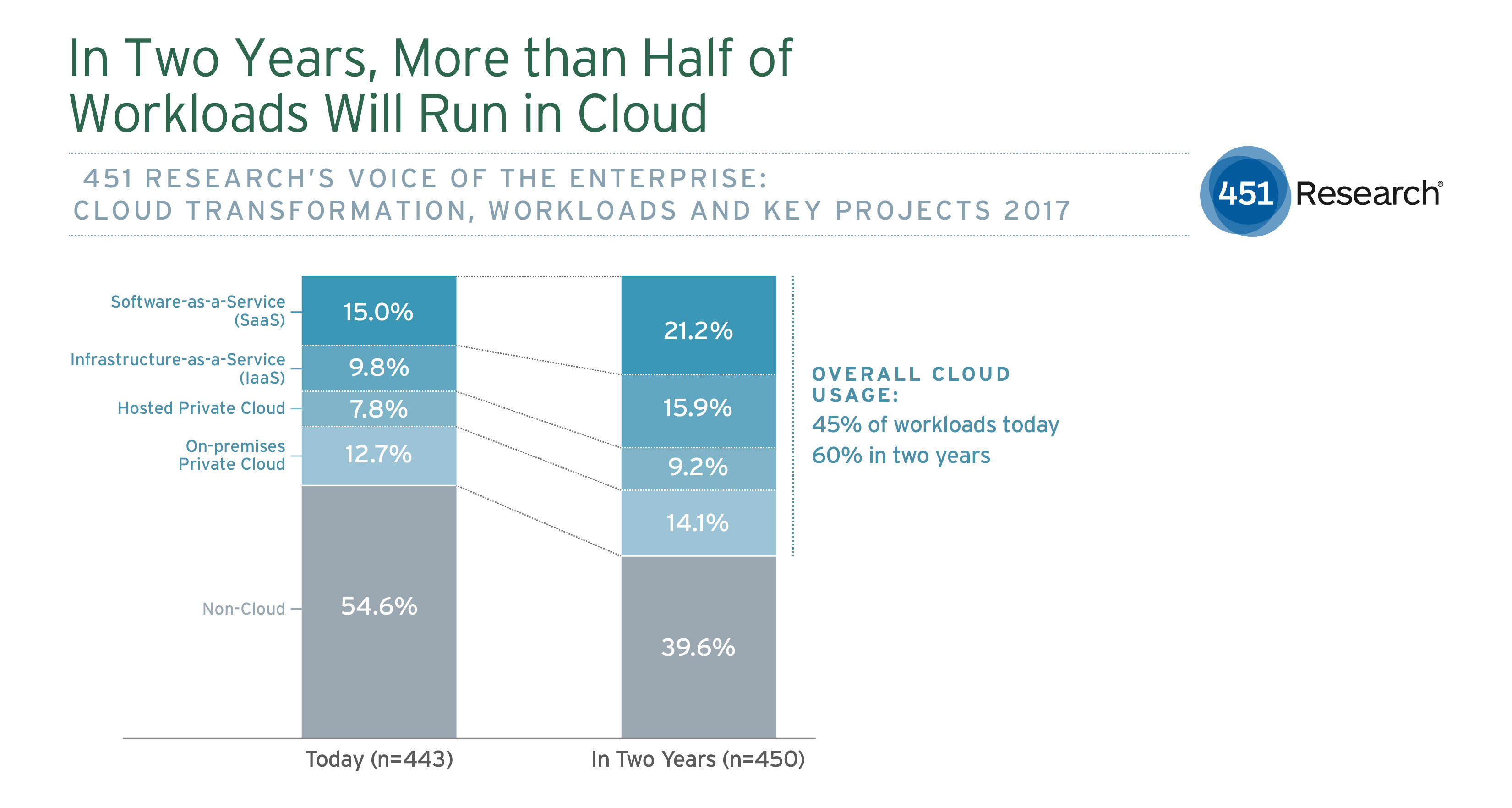

Cloud has certainly been a resonating topic for many technology and business leaders. When it comes to cloud deployment specifically, over the next 12 months respondents are by far most likely to implement a private cloud (73 percent), followed by public (64 percent) and hybrid models (59 percent).

An interesting trend was that 71 percent of JLL respondents said they saw organizations moving away from public cloud and looking to colo or private data centers, including 23 percent who said this happens “constantly.” In our previous study, we saw more than 58 percent say they’re seeing this consistently or occasionally.

A key point here is that cloud is not replacing the data center. Rather, it complements data center solutions.

Business advantages of colocation

In many ways, today’s third-generation colocation solutions are akin to on-demand Software-as-a-Service (SaaS) models. On-premises data centers inhibit scalability and incur significant CapEx costs—such as the building, cooling systems, and racks—and OpEx costs—such as building and maintaining the equipment and providing physical security. These responsibilities and associated costs are moved to the colocation provider.

In addition to the cost advantages, colocation gives businesses a springboard to more easily and quickly embrace the cloud and edge computing, including Internet-of-Things (IoT) devices. Cloud adoption is rapidly growing. Eighty-six percent of enterprises deploy a multi-cloud strategy, with 60 percent having moved mission-critical applications to the public cloud. When it comes to the network edge, market adoption is still in its early phases, but that is quickly changing. As more data and applications get pushed to the network edge and more users and network traffic extend to the edge, performance challenges increase. This directly impacts user experience and even revenue. For example, Amazon finds that every 100 milliseconds of latency translates into a one percent reduction in sales.
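To make the latency figure concrete, here is a minimal Python sketch of the arithmetic. It assumes the "one percent per 100 milliseconds" relationship scales linearly, which is a simplification, and the function name and the retailer figures are hypothetical, chosen only for illustration.

```python
def estimated_sales_loss(annual_sales: float, added_latency_ms: float,
                         loss_per_100ms: float = 0.01) -> float:
    """Estimate sales lost to added latency.

    Assumes a linear relationship (1% of sales per 100 ms by default),
    which is a simplification of the figure cited in the article.
    """
    if added_latency_ms < 0:
        raise ValueError("added_latency_ms must be non-negative")
    # Cap the loss at 100% of sales so extreme inputs stay sensible.
    loss_fraction = min(1.0, (added_latency_ms / 100.0) * loss_per_100ms)
    return annual_sales * loss_fraction


# Hypothetical retailer: $50M in annual online sales, 250 ms of extra latency.
loss = estimated_sales_loss(50_000_000, 250)
print(f"Estimated annual sales at risk: ${loss:,.0f}")
```

Under these assumptions, 250 milliseconds of added latency puts about 2.5 percent of sales at risk, or roughly $1.25 million for this hypothetical retailer.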

One of the most interesting edge computing phenomena today is IoT, which is exploding, with a compound annual growth rate (CAGR) of 34 percent. Gartner predicts there will be 20.4 billion IoT devices by next year, a number that is expected to hit 64 billion by 2025.

Cost-effective private clouds

For private cloud services, colocation is a flexible, cost-efficient alternative to an on-premises data center. Security and regulatory compliance, as related to the physical environment, are overseen by the colocation provider, reducing the complexity of securing your IT environment. At the same time, with an on-demand infrastructure, private cloud services consume only the racks and space that are needed, and IT leaders can scale data center infrastructure up and down as workloads dictate. Consequently, in contrast to on-premises data centers, IT leaders pay only for infrastructure that is needed rather than paying for idle data center space, not to mention the accompanying power consumption.
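The pay-for-what-you-use argument above can be sketched with a few lines of Python. This is an illustration only: the monthly rack demand, the fixed capacity, and the per-rack price are made-up numbers, and the sketch assumes the same per-rack price in both models, which real pricing will not match.

```python
def compare_fixed_vs_on_demand(monthly_rack_demand, fixed_capacity, cost_per_rack):
    """Return (fixed_total, on_demand_total) over the given months.

    fixed_total: paying for a fixed number of racks every month,
                 whether or not they are used (on-premises style).
    on_demand_total: paying only for the racks used each month
                     (colocation style, as described in the article).
    """
    if max(monthly_rack_demand) > fixed_capacity:
        raise ValueError("fixed capacity must cover peak demand")
    fixed_total = fixed_capacity * cost_per_rack * len(monthly_rack_demand)
    on_demand_total = sum(racks * cost_per_rack for racks in monthly_rack_demand)
    return fixed_total, on_demand_total


# Hypothetical year: demand peaks at 20 racks mid-year, at $1,000 per rack per month.
demand = [10, 10, 12, 12, 14, 20, 20, 14, 12, 12, 10, 10]
fixed, on_demand = compare_fixed_vs_on_demand(demand, fixed_capacity=20, cost_per_rack=1000)
print(f"Fixed capacity:  ${fixed:,}")
print(f"On demand:       ${on_demand:,}")
print(f"Paid for idle:   ${fixed - on_demand:,}")
```

With these made-up inputs, sizing for the peak costs $240,000 for the year against $156,000 on demand, so $84,000 goes to racks that sit idle.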

Low latency, fully optimized public clouds

Colocation also offers public cloud advantages. Performance is the top reason IT leaders cite for moving applications to the cloud. It ranks ahead of compliance and security as well as resiliency and availability. On-premises data centers lock organizations into a fixed location, which creates latency when pushing data between the data center and public clouds. Colocation solutions allow them to place applications and data closer to the public clouds; this reduces latency.
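A rough sense of why proximity matters comes from propagation delay alone. The Python sketch below assumes light travels through optical fiber at about 200,000 km per second (roughly two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays, so real-world latency will be higher. The distances are hypothetical.

```python
# Approximate speed of light in optical fiber (about two-thirds of c).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds.

    Ignores routing, queuing, and processing delays, so this is a lower
    bound rather than a prediction of measured latency.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return distance_km * 2 * 1000 / FIBER_SPEED_KM_PER_S


# Hypothetical distances between a data center and a public cloud region.
for label, km in [("on-premises, 1,000 km away", 1000), ("colocation, 50 km away", 50)]:
    print(f"{label}: at least {round_trip_ms(km):.1f} ms round trip")
```

Under these assumptions, every 100 km of fiber adds about one millisecond of round-trip delay before any network equipment is involved.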

With the workload volumes of data analytics and artificial intelligence (AI), the proximity of applications and data to public clouds, in addition to bandwidth performance, is crucial. Connecting applications and data requires a rethink of the traditional data center, and colocation solutions can offer a path forward for organizations seeking to harness the full capabilities of the interconnected digital age. Organizations can distribute their digital infrastructure around the globe to meet local business requirements, while remaining in control and maintaining confidence in meeting resiliency, compliance, and security mandates.

Enter Multi Cloud and Hybrid Cloud

For some use cases, a combination of clouds could provide the best solution. Using multiple public cloud vendors (multi cloud) for independent tasks and duties can provide redundancy and cost savings. The data centers and infrastructure can be spread out geographically to decrease the risk of service loss or disaster, and it makes sense financially to store the second or third copy of data with an additional vendor that offers a good and reliable service at a lower cost.

What is hybrid cloud?

Hybrid cloud simply refers to the presence of multiple deployment types (public or private) with some form of integration or orchestration between them. The hybrid cloud differs from multi cloud in that in the hybrid cloud the components work together while in the multi cloud they remain separate. An organization might choose the hybrid cloud to have the ability to rapidly expand its storage or computing when necessary for planned or unplanned spikes in demand, such as occur during holiday seasons for a retailer, or during a service outage at the primary data center. We wrote about the hybrid cloud in a previous post, Confused About the Hybrid Cloud? You’re Not Alone.
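The "expand when necessary" pattern described above is often called cloud bursting. The Python sketch below shows the core idea under simple assumptions: workload is measured in abstract units, the private environment is filled first, and anything beyond its capacity overflows to a public cloud. The `Placement` class, the `place_workload` function, and the numbers are hypothetical and do not represent any vendor's orchestration API.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    private_units: int  # workload served by the private environment
    public_units: int   # overflow sent to the public cloud


def place_workload(demand_units: int, private_capacity: int) -> Placement:
    """Fill private capacity first, then burst the remainder to public cloud."""
    if demand_units < 0 or private_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    private = min(demand_units, private_capacity)
    return Placement(private_units=private, public_units=demand_units - private)


# Hypothetical retailer with 100 units of private capacity.
print(place_workload(80, 100))   # normal week: everything stays private
print(place_workload(140, 100))  # holiday spike: 40 units burst to public cloud
print(place_workload(80, 0))     # primary data center outage: all 80 units go public
```

The same function covers both cases named in the text: a planned seasonal spike and an outage at the primary data center, which is modeled here as private capacity dropping to zero.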

Extending to the edge

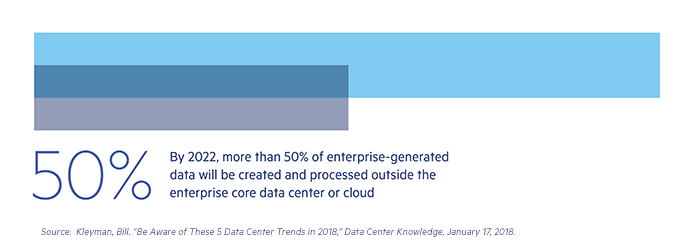

Gone are the days of choosing a data center based solely on the fact that it is close to your company’s headquarters. Now, companies must operate ubiquitously and on-demand across all business functions and points of business presence, no matter where customers, partners, and employees are geographically located. Digital transformation isn’t slowing down any time soon, and IT architecture needs have been pushed out beyond traditional cloud services and data centers to the edge. Edge computing places dedicated resources closer to end users and devices, which can free up core architecture and valuable network assets for other mission-critical tasks. As more and more use cases emerge, enterprises will continually seek the benefit of deploying copies of applications and data close to the edge for performance and regulatory purposes. Gartner predicts that, by 2022, more than 50% of enterprise-generated data will be created and processed outside the enterprise core data center or cloud.

Just as the law of gravitation holds that all objects attract each other, data and applications attract one another. As a body of data grows, it becomes more difficult to move, and it pulls applications and services toward it. This is referred to as “data gravity”. The more data gravity there is, the more applications and services are forced to move closer to the data. This is not ideal for organizations that want more control over moving and accessing their data whenever and wherever they want. Re-architecting towards a decentralized infrastructure removes data gravity barriers to accommodate distributed workflows. It will be paramount for IT leaders to securely connect and enable a pervasive data center platform that brings users, networks, applications, and cloud systems closer to the data.

Colocation solutions, coupled with a platform that connects environments to the networks, enterprises, content providers, and cloud and IT providers a business ecosystem needs, give businesses the flexibility to locate data center workload capabilities near the computing edge. This reduces latency, improves availability and reliability, and decreases costs. In a nutshell, whether the workload involves data analytics, AI-enabled applications, high-performance applications, or IoT devices, colocation shrinks the distance between computing resources and the edge.

Colocation Brings the Cloud and Edge to the Data

Today, end users have access, in mere milliseconds, to applications and data that were just a pipe dream only a few years ago. Business leaders can capture intelligence using IoT sensors and analyze that information in near real time, enabling them to expand into new markets, streamline operations, and deliver unparalleled customer experiences. Talk about weaponizing business infrastructure!

This, as we mentioned above, requires a decentralized infrastructure that allows IT leaders everywhere to embrace the possibilities of everything from multi-cloud deployments to edge capabilities such as IoT. The traditional on-premises data center simply cannot deliver the speed and cost efficiency this new interconnected ecosystem demands. The third generation of colocation services offers IT leaders an agile, cost-effective means to meet these requirements. And with 65 percent of workloads still residing in on-premises data centers (versus the public cloud or colocation facilities), much work is left to be done. Solving for the placement and housing of distributed data footprints, combined with large-scale computing between the cloud and the edge, is of critical importance.

If you would like to engage with a Trusted Advisor that can advise you on the topics covered in this article, give our technical help desk a call at +1 (888) 711-3656 and we'll put you in contact with a trusted independent technology specialist in your area.

About the Author: Noah Woodburn is the Chief Cloud Correspondent for BizPhonics.com. He covers all of the recent developments in cloud computing, storage, and compliance. He's been all over the globe, attending conferences, talking to analysts, and measuring actual cloud utilization to piece together a comprehensive view of which clouds work best for which industries.